RobotGPT on the way and Tulsa attempts to rise | Intent, 0007

Google's new RT-2 vision-language-action model and the results of the Tulsa Remote program

Intent is all about helping talent in tech become more intentional with their careers by becoming more informed, more fluent, and more aware of the goings-on within tech and adjacent industries. We welcome your feedback!

On today’s agenda:

Tulsa’s attempt to attract remote workers pays off

Google's new R2-D2... sorry, RT-2 could be an LLM-like revolution for robotics

An update on Tulsa’s attempt to force itself into a tech hub

Midjourney: tulsa skyline, emerging skyscrapers, bright

Between 2020 and 2022, two million people left America’s largest cities. Today, some half of New York’s office buildings sit empty. As of now, commuting in large cities has stabilized at about 60% of pre-pandemic levels. Where did everyone go?

The data on the overall trends is still shifting, but one beneficiary is what we might call “tertiary cities.” There are prime stops like New York and San Francisco. Then, we have our secondary metros like Austin, Miami, and Nashville. In the tertiary spot is “everyone else who is trying.”

And a surprising contender for a tertiary slot? Tulsa, Oklahoma.

In 2018, the city started its efforts with Tulsa Remote. It offers $10k to remote workers who move to Tulsa and stay for at least 12 months, and now boasts over 2.5k members. A third-party report on the program estimates that every $1 spent on the program has brought $13 in economic activity to the region.

It was reported earlier this year that 90% of participants have remained in Tulsa beyond the one-year commitment. A study published by the Brookings Institution found that people in the program had a higher chance of staying in the community long-term and had higher levels of community engagement than those who did not join.

Now, the Tulsa Innovation Labs (TIL) nonprofit has stepped in to catalyze the local tech economy. Run by the George Kaiser Family Foundation, which also sponsored Tulsa Remote, TIL played a huge role in securing $38.2 million in federal funding for local innovation. A key initiative is attracting talent in four sectors: energy tech, healthtech, cybersecurity, and advanced air mobility. They’re also turning their focus to recruiting skilled tech workers fleeing Ukraine.

TIL released its first-ever impact report and found that:

TIL is on pace to create or place up to 10K tech-related jobs in 8 years.

Job growth from TIL is estimated to increase Tulsa’s tech employment by 5% annually.

TIL initiatives are poised to catalyze over $1B in additional public and private capital.

In the next eight years, TIL initiatives will draw nearly 150 new companies to the region.

Tulsa might be building a blueprint for Midwest tech incubation, drawing the coastal dwellers who usually roll their eyes. Beyond attracting remote workers, there has been some early startup creation, too:

BillionMinds ($1.7M funded) is an upskilling platform for remote workers, allowing companies to offer skill-building workshops and classes.

Volt ($3.3M funded) allows businesses to comply with government regulations when it comes to their SMS marketing, and get analytics to monitor their efforts.

PatchRx ($1.6M funded) is a remote therapeutic management platform that helps patients take medication on time.

Some cities have begun to replicate the model (including Newton, IA; Harmony, MN; and Noblesville, IN). There’s even a startup called MakeMyMove ($2.6M funded), which seeks to connect remote workers to new places to live. It hosts a whole slew of offers from small towns and cities that seek to attract new talent.

These hubs are not free from the side effects of tech development, though. In Charlotte, NC, another growing hub, housing prices have gone up 54%, and Tulsa’s have gone up by 47% on average. This month, Tulsa residents are expected to vote on whether to commit $75M toward new housing initiatives, which is a good proxy for whether they, too, see the benefits from this increased migration.

One thing is for sure, though – whether the growth is sustained or not, Tulsa is making a name for the flyover states, and that’s something to talk about.

Job searching? We’re built to help

Check out CareerMakers, by Free Agency. It’s the job search prep program made for tech people, by tech people.

We’ve helped candidates negotiate over $320M in compensation since 2019 — supercharge your job search and get started now.

How Google’s new RT-2 could bring us general-purpose robots

Midjourney: assembly line robot with arms, bright room, clean, award-winning, depth of field --ar 4:1 --v 5.1

Recently, Google DeepMind announced Robotic Transformer 2 (RT-2), a new vision-language-action (VLA) model that helps robots easily understand and perform actions. We’ll get into the software that enables it, some known players in the robotics space who might be competitors, and some smaller startups who might be able to leverage RT-2 going forward.

So what is RT-2?

In short, RT-2 is a first-of-its-kind Transformer-based neural network model that’s designed to inform, enable, and output robotic actions. It is not a robot itself. Think of it as an LLM whose output is robot actions rather than text: the RT-2 model uses knowledge learned from web data to inform physical actions and decisions.

The goal is to enable the creation and adoption of more “general-purpose” robots for everyday use, as opposed to most robots in use today, which tend to be hyper-specific for manufacturing or laughably bad (there’s even a whole subreddit dedicated to them).

How (and why) it works

Until now, many general-purpose robots have needed detailed instructions to execute a task: “move forward 3 meters,” “lower arm 20 degrees,” “close grip 30%,” just to pick up an object. And more advanced robots have required expensive and time-consuming training on billions of data points across objects, tasks, and situations.

Here’s how Google defines the before and after:

“For example, if you wanted previous systems to be able to throw away a piece of trash, you would have to explicitly train them to be able to identify trash, as well as pick it up and throw it away. Because RT-2 is able to transfer knowledge from a large corpus of web data, it already has an idea of what trash is and can identify it without explicit training. It even has an idea of how to throw away the trash, even though it’s never been trained to take that action.”

This allows a robot to take in data, images, and language from the world around it, make accurate assumptions, and direct its own actions. RT-2 processes this information the way a language model does and represents actions as tokens (if that sounds similar to how an LLM handles text, that’s because it is), so its output is a string of action tokens.
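For the curious, here is a rough sketch of what “actions as tokens” could look like in practice. It is an illustration rather than Google's implementation: the function names, the choice of 256 bins, and the -1 to 1 value range are all assumptions made for this example. The idea is simply that each continuous motor value gets rounded into a numbered bucket, and the bucket numbers are written out as a plain string that a language model can emit.

```python
from typing import List, Sequence

# Assumption for illustration: each action dimension is split into 256 buckets.
NUM_BINS = 256


def encode_action(values: Sequence[float], low: float = -1.0, high: float = 1.0) -> str:
    """Turn continuous action values into a space-separated string of bin numbers."""
    tokens = []
    for value in values:
        # Keep the value inside the allowed range before bucketing it.
        clipped = min(max(value, low), high)
        # Scale to 0..NUM_BINS-1 and round to the nearest bucket.
        bin_index = round((clipped - low) / (high - low) * (NUM_BINS - 1))
        tokens.append(str(bin_index))
    return " ".join(tokens)


def decode_action(action_string: str, low: float = -1.0, high: float = 1.0) -> List[float]:
    """Turn an action string back into approximate continuous values."""
    return [
        low + int(token) / (NUM_BINS - 1) * (high - low)
        for token in action_string.split()
    ]


if __name__ == "__main__":
    # Hypothetical 3-value command, e.g. small arm movements plus a gripper setting.
    action_string = encode_action([0.3, -0.8, 1.0])
    print(action_string)
    print(decode_action(action_string))
```

Decoding does not give back the exact original numbers, only the nearest bucket. That small loss of precision is the price of being able to treat movements like words.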

Here’s how Google themselves explain it:

“Inspired by chain-of-thought prompting methods used in LLMs, we probed our models to combine robotic control with chain-of-thought reasoning to enable learning long-horizon planning and low-level skills within a single model.

In particular, we fine-tuned a variant of RT-2 for just a few hundred gradient steps to increase its ability to use language and actions jointly. Then we augmented the data to include an additional ‘Plan’ step, first describing the purpose of the action that the robot is about to take in natural language, followed by “Action” and the action tokens.”
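The “Plan” then “Action” layout Google describes might look something like the sketch below. The “Plan” and “Action” labels come from the quote above. Everything else here is a hypothetical stand-in for illustration, including the `RobotStep` class, the “Instruction” label, the exact line layout, and the token numbers.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RobotStep:
    """One hypothetical training example: a command, a plan, and action tokens."""
    instruction: str
    plan: str
    action_tokens: List[int]


def format_example(step: RobotStep) -> str:
    """Write the plan in natural language first, then the action tokens."""
    action = " ".join(str(token) for token in step.action_tokens)
    return f"Instruction: {step.instruction}\nPlan: {step.plan}\nAction: {action}"


def parse_output(text: str) -> Tuple[str, List[int]]:
    """Pull the plan text and the action tokens back out of a formatted string."""
    plan = ""
    action: List[int] = []
    for line in text.splitlines():
        if line.startswith("Plan:"):
            plan = line[len("Plan:"):].strip()
        elif line.startswith("Action:"):
            action = [int(token) for token in line[len("Action:"):].split()]
    return plan, action


if __name__ == "__main__":
    # Made-up example values, not real RT-2 data.
    step = RobotStep("throw away the trash", "pick up the empty can", [1, 128, 91])
    text = format_example(step)
    print(text)
    print(parse_output(text))
```

Putting the plan before the action means the model has to state what it is about to do before it commits to the movement, which is the same trick chain-of-thought prompting uses with text.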

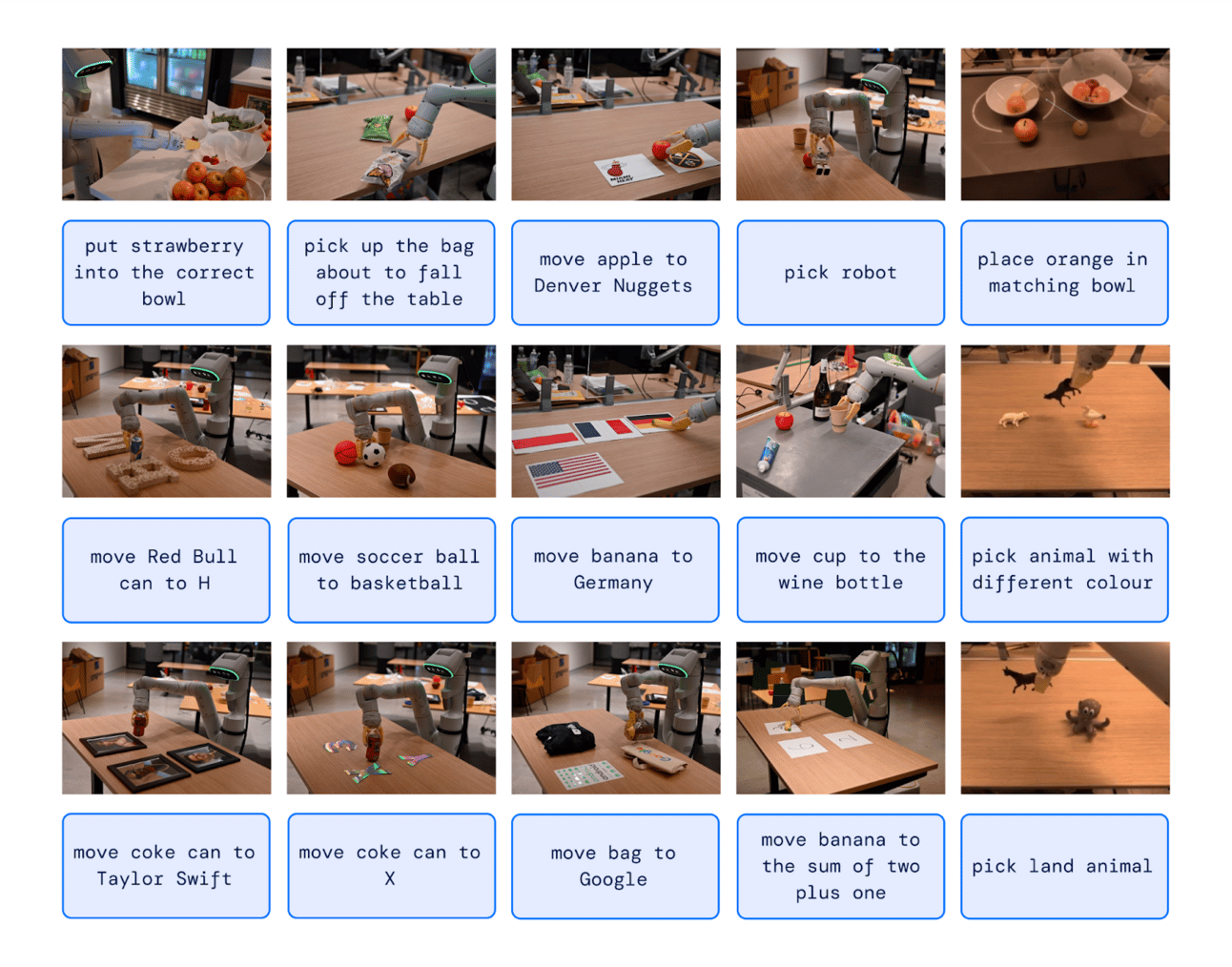

Because the model understands “visual-semantic concepts,” the user can give commands that leave the robot a lot more discretion, rather than spelling out specific instructions, like in the image below:

Courtesy of Google DeepMind

Google claims to have seen a 3x improvement over previous pre-trained models in categories like symbol understanding, reasoning, and human recognition. It’s all broken down in Google DeepMind’s blog post about the announcement. There are a lot more details than we can fit into this post, so we recommend checking it out.

Other major robotics players

Here’s a quick “where are they now” on the robotics companies you might not have heard from recently:

Who might stand to gain from RT-2’s development?

We’ve come a long way from the days of the voice-activated “Hey R2” Star Wars toy from some of our childhoods — these are some of the earlier-stage players in robotics that you might not have heard of, but could be making waves soon enough.

Energy Robotics ($4.9M funded) is “robots as a service,” or RaaS. They’re automating inspection across oil and gas, chemical, and utility plants, providing inspectors with rented fleets of robots.

Voliro ($2.2M funded) is building multi-purpose robotic drones to provide aerial inspections of active construction sites.

Modular Robotics ($11.8M funded) develops “Cubelets,” which are robot blocks designed to teach problem-solving skills and assist in everyday tasks. The National Science Foundation has been a major investor in Modular.

Magazino ($49.3M funded) creates autonomous mobile robots for e-commerce fulfillment companies, as well as industrial robots for production and heavy load management.

Harvest Automation ($33.6M funded) specializes in building robots for material handling in agriculture — they’re used to automate labor-intensive and physically demanding tasks for nurseries, greenhouses, and farms.

Simbe Robotics ($26M funded) automates retail’s repetitive tasks, and is currently being used in more than 100 stores by grocery chains in the US.

Robust AI ($42.5M funded) is a platform tool used to reduce the setup time for robotics systems using a cognitive engine. Will they utilize RT-2, or will it make them obsolete?

Think a friend could use a dose of Intent?

Thanks for reading! Forward this along to your least-informed friend in tech. We love the attention. ;)

And as always, let us know what you think! If you aren't subscribed, what're you doing!? Click here!

Sent with Intent,

Free Agency