AI chat, agent, browser, or operating system? | Intent, 0028

Also: Gavin Newsom and Ted Cruz take on tech.

Intent exists to help tech talent become more informed, more fluent, and more aware of the forces shaping their careers. We welcome feedback – just hit reply.

The agenda ahead

OpenAI, Google DeepMind, and Perplexity reveal two new default interaction surfaces for the future of AI

Gavin Newsom and Ted Cruz start hinting at new regulatory needs with first shots at new tech frameworks

Small bites across venture dollars, the space race, and AI infrastructure

But first, a quick programming note —

Free 30m session: How to Use Claude Code (or Codex CLI) for Non-Code Use Cases, RSVP here to attend live or receive the recording

Free 30m session: AI for PMs: 10x Your Design Exploration, RSVP here to attend live or receive the recording

Two default surfaces: chat wants to be the OS, the browser wants to be chat

For the past few decades, we’ve built around two places where users “lived” and displayed intent: Google (search) and social media.

This week’s AI announcements shifted that picture: going forward, we’ll increasingly be building for the chat window and the agentic browser. Let’s dive in.

First, the announcements that signaled the shift:

OpenAI — as we talked about earlier this week, apps (like Spotify, Canva, Zillow) now run inside ChatGPT via a new Apps SDK, with Instant Checkout powered by an Agentic Commerce Protocol.

Google Gemini — just launched Gemini CLI extensions, a framework for connecting its CLI agent to third-party developer tools (e.g., Dynatrace, Elastic, Postman, Stripe).

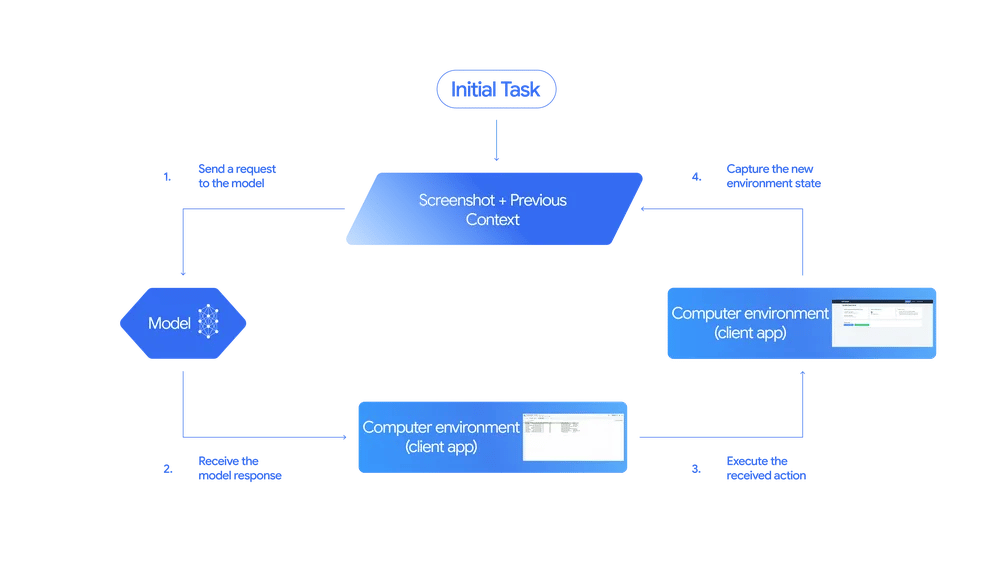

Google DeepMind — Gemini 2.5 Computer Use hit preview in the Gemini API and Vertex AI, letting agents “see” screenshots and emit concrete UI navigation actions to drive a browser like a human.

Perplexity — Comet, an AI browser that keeps the agent inside your navigation, is now free to everyone.

Okay, what are the implications?

If the last generation of interfaces was about getting you to type a search query or connect with a friend, the new generation seems to want you to (1) chat your way to getting things done outside the interface and (2) chat your way into controlling the interface itself.

Paradigm 1

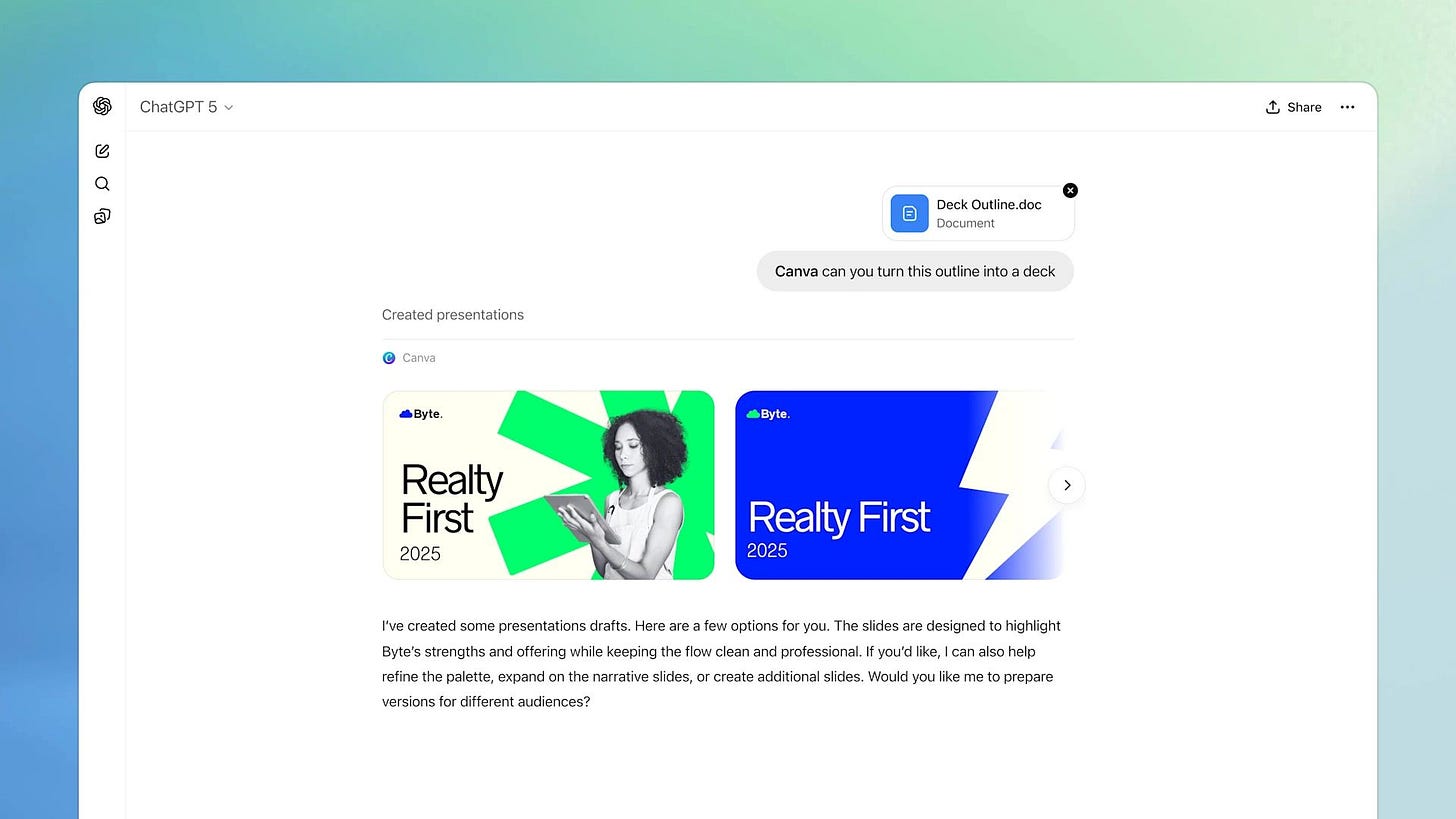

Think about it: with OpenAI embracing apps inside the chat window, and Gemini and other coding tools leaning on MCP-style connectors, this first paradigm suggests that you should live in the chat window. The AI’s agentic capability, combined with integrations, will get results on your behalf. The success metric: the more you talk to these chat interfaces to achieve results outside of the interface itself, the better.

Canva as an app inside ChatGPT

Paradigm 2

On the other hand, Perplexity and Google’s Computer Use team want you to chat to control many interfaces. This second paradigm suggests that you should continue to access a variety of interfaces, but on autopilot. The AI’s agentic capability will do the navigation for you — you can still see it, and it’s still the legacy infrastructure of the web or your operating system. The success metric: the more you talk to these chat-computer-use interfaces to access the rest of the internet, the better.

Gemini 2.5 Computer Use model flow
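For readers who think in code, the second paradigm boils down to a loop: look at the screen, ask a model for the next UI action, perform it, repeat. The sketch below is a toy illustration of that loop only. It does not call the Gemini API; `Action`, `FakeBrowser`, `scripted_model`, and `run_agent` are all hypothetical names made up for this example, and the "screenshot" is just a string standing in for an image.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Action:
    """One UI step proposed by the model (hypothetical schema)."""
    kind: str          # "type", "click", or "done"
    target: str = ""   # which element to act on
    text: str = ""     # text to enter for "type" actions


@dataclass
class FakeBrowser:
    """Stand-in for a real browser so the loop can run offline."""
    fields: Dict[str, str] = field(default_factory=dict)
    submitted: bool = False

    def screenshot(self) -> str:
        # A real agent would capture pixels; a string keeps the sketch simple.
        return f"fields={self.fields} submitted={self.submitted}"

    def execute(self, action: Action) -> None:
        if action.kind == "type":
            self.fields[action.target] = action.text
        elif action.kind == "click" and action.target == "submit":
            self.submitted = True


def scripted_model(goal: str, screenshot: str, history: List[Action]) -> Action:
    """Placeholder for a model call: picks the next step from what it 'sees'."""
    if "email" not in screenshot:
        return Action("type", target="email", text="a@example.com")
    if "submitted=False" in screenshot:
        return Action("click", target="submit")
    return Action("done")


def run_agent(goal: str,
              browser: FakeBrowser,
              propose: Callable[[str, str, List[Action]], Action],
              max_steps: int = 10) -> List[Action]:
    """See the screen, get an action, perform it, until done or out of steps."""
    history: List[Action] = []
    for _ in range(max_steps):
        action = propose(goal, browser.screenshot(), history)
        if action.kind == "done":
            break
        browser.execute(action)
        history.append(action)
    return history


browser = FakeBrowser()
history = run_agent("Sign up for the newsletter", browser, scripted_model)
```

The `max_steps` cap is a design choice worth keeping in any real version: an agent driving a live UI needs a hard stop in case it never reaches "done".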

Now, you might already notice that these converge at some point — a chat interface could rely on computer or browser use to get things done, or an AI browser could embrace third party integrations to skip always having to use a UI.

But at the core is a tension that you see being debated among developers on X and Hacker News: are we going to continue to build software for humans, or are we going to start writing software that’s mostly oriented toward agents?

In the browser and computer-use paradigm, we’re suggesting that the legacy internet is still good: agents should learn to see, navigate, and transact like we do, which also keeps a layer of ‘backward compatibility’ if we continue to build legible UIs. In the chat and integration paradigm, we’re suggesting that the internet is programmable, and that it’s better to stop worrying about the interface and start focusing on transactional pipelines (like APIs and MCP servers).

Our take: If you’re building software today, we’re in limbo, and it’s important to embrace limbo. As an example, if you’re starting a new startup, build a beautiful and high-conversion landing page for us all to visit, but include an llms.txt file in your root directory so that AI search bots have a simple file to crawl and learn about your business without needing to parse through your frontend code.
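If you want to try the llms.txt idea, here is a minimal sketch in Python. It assumes the layout from the public llms.txt proposal (a title heading, a one-line blockquote summary, then sections of links), and everything specific in it is hypothetical: the company name, the URLs, and the `build_llms_txt` helper are invented for illustration.

```python
from typing import Dict, List, Tuple

# (link title, URL, short note) for each page you want bots to find
Link = Tuple[str, str, str]


def build_llms_txt(name: str, summary: str, sections: Dict[str, List[Link]]) -> str:
    """Build llms.txt content: title, blockquote summary, sections of links."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    # End with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical startup and URLs, for illustration only.
content = build_llms_txt(
    "Example Startup",
    "Example Startup makes scheduling software for dental clinics.",
    {
        "Docs": [
            ("Pricing", "https://example.com/pricing.md", "Plans and limits"),
            ("API overview", "https://example.com/api.md", "Endpoints and auth"),
        ],
    },
)

if __name__ == "__main__":
    print(content)
```

Save the output as `llms.txt` at the root of your site, next to your landing page. Since the format is still a proposal, how individual crawlers treat the file is not guaranteed, so think of it as a cheap hedge and not a replacement for a readable frontend.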

For the foreseeable future, you’ve got two modalities to cater to.

The regulatory pincer: California tightens, Washington threatens

Two stories this week reveal the state of the relationship between tech and government.

California’s transparency play

After signing SB 53 in late September (requiring frontier AI labs to disclose safety testing and incident reporting), Gavin Newsom yesterday signed AB 656 and AB 566. AB 656 mandates that social media companies ensure that account cancellation triggers a full deletion of a user’s personal data, while AB 566 requires that browser companies let users opt out of all third-party tracking through a universal setting.

What the heck is jawboning?

Meanwhile, crazy Ted Cruz held a Senate committee hearing titled “Shut Your App: How Uncle Sam Jawboned Big Tech Into Silencing Americans.” It was a lot of ranting about government pressure on big tech to censor speech, something that, as you can probably tell, the team at Intent doesn’t think actually happened, no matter what blinged-out Zuckerberg claims on Joe Rogan’s podcast.

The duality

In these two stories, there's a shared reality: tech has largely failed to self-regulate on the issues that matter most to the public (AI safety, content moderation, data privacy), and the U.S. has generally struggled to regulate proactively until problems metastasize.

And the pincer movement from different sides of the aisle at different altitudes matters because it signals that 2026 will see regulation from multiple angles simultaneously:

Blue states will continue adding transparency and safety requirements

Red-led committees will push back on content moderation and "jawboning"

Federal frameworks remain elusive, so the state-by-state patchwork intensifies

If you work in AI safety, trust & safety, or policy at a tech company, you're now navigating contradictory pressures: demonstrate robust safety measures to satisfy California-style regulation, while avoiding anything that looks like government-coordinated content moderation to satisfy Cruz-style scrutiny.

Our take: good luck.

Small bites

Grok 4 lands in Azure AI Foundry – Microsoft leans into multi‑model serving. For buyers, this normalizes “model shopping”; for vendors, it squeezes per‑token margin.

Google puts €5B into Belgian data centers – more European, AI‑tuned capacity and new wind PPA commitments. Translation: AI scale is increasingly energy + real estate.

Stoke Space raises $510M for fully reusable Nova – the reusable rocket startup, which aims to compete with SpaceX's Starship by offering faster turnaround, secured funding led by Industrious Ventures. The private space race continues to heat up, driven by demand for sustainable satellite deployment.

Q3 ’25 venture capital bifurcation: ~46% of global dollars → AI – $45B to AI alone, with 29% to Anthropic; hardware took a distant second. The upshot: non‑AI rounds must be cleaner, cheaper, or category‑defining.

Think a friend could use a dose of Intent? Forward this along – good emails can turn a week around.

Sent with Intent,

Free Agency